If you’ve been reading my web technology blog, know what I’m up to or are generally stalking me in any other way, then you’ll know I have been getting my hands dirty with hosting artwork and photography images for a number of years now. From this experience I’ve distilled the following list of dos and don’ts.

While this list is mainly geared towards high-load situations, a lot of these points can easily be applied to sites of any scale, and you never know when your quiet little blog is going to be hit by Digg…

Don’t serve images via your application (e.g. PHP)

I think it’s totally cool to use an on-the-fly image generation script. Wanna tweak all the image sizes on your mega site by 1 pixel? No problem: step 1) change a line in a config file, step 2) there is no step 2! In fact that’s the way I generally do things.

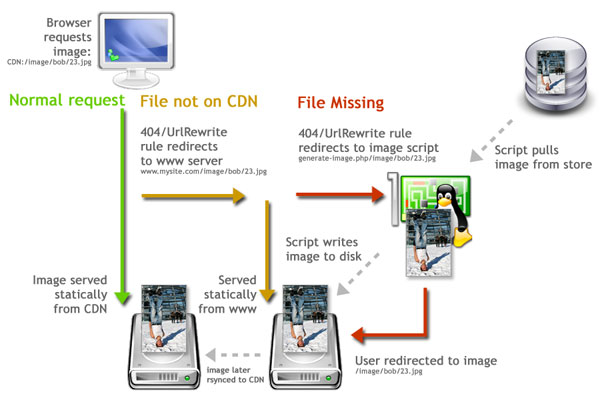

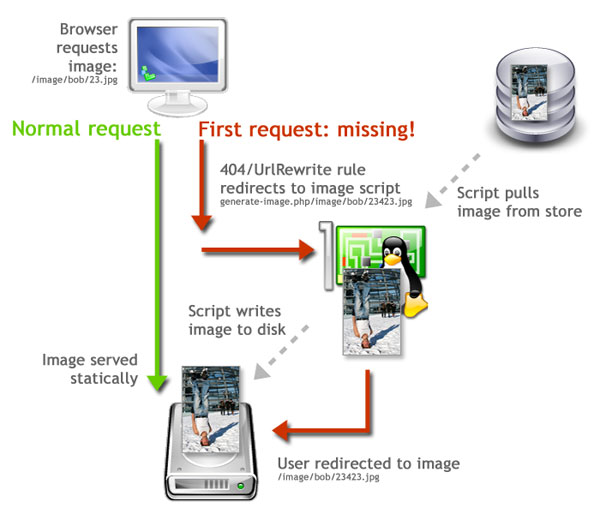

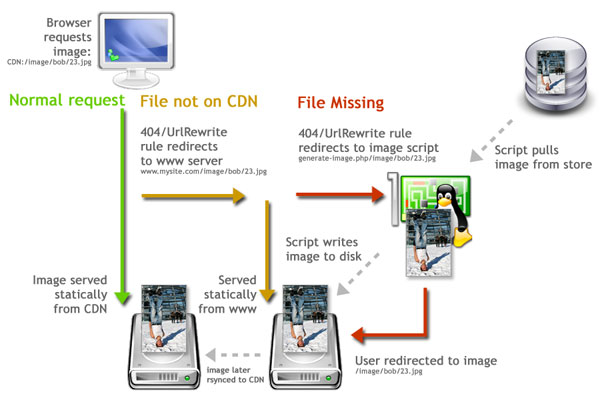

However, there’s absolutely no reason why you should call this script more than once per image. Yes, I know you can handle caching in the script, but why bother? Write the images to disk and let your web server do the dirty work of caching etc. The way to do this is to ‘lazy load’ the script, i.e. call it only the first time an image is requested; after that the image file is served as normal. Lazy loading means your server doesn’t get clogged up for hours regenerating all your images: they are only regenerated when requested. Anyway, this is simple enough to do with a 404 rule or URL rewriting:

Diagram: Generate images with a script, but serve with apache (see tips below)

You can use a 404 handler script to generate missing images; here’s how with a .htaccess file:

ErrorDocument 404 /generate_image.php

or an apache url rewrite rule:

RewriteCond $1 ^image

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule ^(.*)$ /generate_image.php/$1 [L]

Then in generate_image.php use the ‘REQUEST_URI’ server variable to figure out what the image is. Hopefully you have some sort of unique key in the file name, e.g.

/image/user/bob/23.jpg

and call your image generation script. Here’s a brief PHP example (based on the 404 redirect):

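//what image is this? (drop any query string and the leading slash first)

$path = parse_url($_SERVER['REQUEST_URI'], PHP_URL_PATH);

$bits = explode('/', trim($path, '/'));

//e.g. array('image', 'user', 'bob', '23.jpg')

if(count($bits) == 4 && $bits[0] == 'image' && $bits[1] == 'user')

{

$user = $bits[2];

//strip the extension, so '23.jpg' becomes '23'

$image_id = basename($bits[3], '.jpg');

generate_image_function($user,$image_id);

//now redirect to the image etc.

}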
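To make the ‘generate once, then let the web server take over’ idea a bit more concrete, here is a rough sketch. It assumes the /image/user/name/id.jpg layout from the example URL above, and a hypothetical $generator callback standing in for whatever resizing code you already have. The helper names parse_image_request() and lazy_generate() are made up for illustration, so treat this as one possible shape rather than the way to do it.

```php
<?php
// Sketch only: parse_image_request() and lazy_generate() are hypothetical helpers.

/**
 * Turn "/image/user/bob/23.jpg?x=1" into ['user' => 'bob', 'image_id' => '23'],
 * or return null if the path is not an image URL we recognise.
 */
function parse_image_request(string $request_uri): ?array
{
    // Drop any query string, then the leading and trailing slashes.
    $path = (string) parse_url($request_uri, PHP_URL_PATH);
    $bits = explode('/', trim($path, '/'));

    if (count($bits) !== 4 || $bits[0] !== 'image' || $bits[1] !== 'user') {
        return null;
    }
    // Whitelist characters so a request can never escape the image folder.
    if (!preg_match('/^[A-Za-z0-9_-]+$/', $bits[2])
        || !preg_match('/^(\d+)\.jpg$/', $bits[3], $m)) {
        return null;
    }
    return ['user' => $bits[2], 'image_id' => $m[1]];
}

/**
 * Generate the file once, at the path the web server will look for next time.
 * Returns the file path, or null if the request was not for a known image.
 */
function lazy_generate(string $request_uri, string $doc_root, callable $generator): ?string
{
    $req = parse_image_request($request_uri);
    if ($req === null) {
        return null;
    }
    $target = "{$doc_root}/image/user/{$req['user']}/{$req['image_id']}.jpg";
    if (!is_file($target)) {
        if (!is_dir(dirname($target))) {
            mkdir(dirname($target), 0755, true);
        }
        // $generator is assumed to return the raw JPEG bytes for this image.
        file_put_contents($target, $generator($req['user'], $req['image_id']), LOCK_EX);
    }
    return $target;
}
```

In generate_image.php you would call something like lazy_generate($_SERVER['REQUEST_URI'], $_SERVER['DOCUMENT_ROOT'], 'my_resize_function') and then either redirect to the image or send the file yourself. If you send it yourself from a 404 handler, you will most likely need to set a 200 status explicitly, otherwise the response keeps its 404 code.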

Do use a CDN

A content delivery network helps speed up your page loads on many levels.

- Images load faster if served from a server geographically near the user

- Multiple servers mean more images can load in parallel

- It can free up resources on your application server (i.e. your website), as you reduce the number of requests hitting it; plus there’s no need for the image web server to load all the bells and whistles your app needs (PHP modules etc.)

Poor Man’s CDN

A CDN sounds a bit scary and expensive, but if you simply move your images onto another server you’ll see a lot of the benefits already. As a simple poor man’s CDN you could do the following:

- Set up a few domains for images; subdomains will do: images-a.mysite.com, images-b.mysite.com, images-c.mysite.com

- Get hold of one or more other webservers (bog standard webhosting accounts will do)

- Use your app or round robin DNS (http://en.wikipedia.org/wiki/Round_robin_DNS) to randomly prepend your domains to your image URLs, e.g. http://images-a.mysite.com/image/user/bob/97892789.jpg

- Copy/rsync your /image folder to the other server(s) with a cron job

- On the image servers have a 404/urlrewrite rule that sends the user back to the original server for images that aren’t yet copied across.

.htaccess example:

RewriteCond $1 ^image

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule ^(.*)$ http://www.mysite.com/$1 [L]

This will work even if all the domains are on the same server (but different to your app) and aliased to the same directory, plus it sets you up ready for expansion later when you really do need to break out onto multiple servers.
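If you go the ‘use your app’ route for picking a subdomain, something along these lines might do. Note that this sketch deliberately swaps random selection for a hash of the image path, so the same image always comes from the same hostname and browser caches aren’t wasted. The function name image_url_for() is made up for illustration, and the host list simply reuses the example subdomains from the list above.

```php
<?php
// Sketch only: image_url_for() is a hypothetical helper.

/**
 * Map an image path to one of several image hosts.
 * Hashing the path (instead of picking at random) means the same image
 * always gets the same host name.
 */
function image_url_for(string $path, array $hosts): string
{
    // Mask to a non-negative value so the modulo is safe on 32-bit builds too.
    $index = (crc32($path) & 0x7FFFFFFF) % count($hosts);
    return 'http://' . $hosts[$index] . '/' . ltrim($path, '/');
}

$hosts = ['images-a.mysite.com', 'images-b.mysite.com', 'images-c.mysite.com'];
echo image_url_for('/image/user/bob/97892789.jpg', $hosts), "\n";
```

Adding a fourth host later changes which host most images map to, which is fine here because every image server falls back to the original server for files it doesn’t have yet.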

Poor man’s CDN plan B – Amazon S3

Well, this could perhaps be plan A: as you’ll see in the next point, S3 could act quite nicely as a CDN, being a geographically dispersed set of redundant servers! My main concern would be the potential for bandwidth costs to rise. They’ll be cheap enough to begin with, but it seems to me the cost scales closely with the bandwidth. Compare 5TB of monthly transfer: on S3 = $900, on Dreamhost = included in the basic plan.

Do host data sources (precious original images) on a third party infinite disk

If you’ve got some funky image auto regeneration going on, or you simply want to store higher resolution ‘originals’, then you’ll need a large warehouse and a caretaker to look after them. Ideally multiple redundant replicated warehouses and caretakers in case one gets suicide bomber attacked etc.

When I started The Artists Web I realised storage would be the first bottleneck I’d hit, so I quietly hoped the problem would just go away. In fact it did just go away, because one day I discovered Amazon S3; moreover, I read on Don MacAskill’s blog that his company switched over in about a day. So did we.

Amazon S3 is essentially an infinite network disk. I won’t go into any technical details here, but it’s cheap, easy to set up and spreads your data around the world in multiple redundant locations. There are plenty of libraries, command line tools and a few commercial services to boot.

Don’t store images in a database

When I was planning a few years back, I remember reading forum threads debating whether it was a good idea to store images in a database. The reason I liked the idea of storing images in a database was centralization of data: no need to manage image backups separately. Arguments against seemed to be more about having to do your own caching, use of resources etc. However, you can output cache headers from your scripts, write the (thumbnail) files to the file system etc. to get round all these performance issues.

Nice as the central backup idea is, the problem (as I learned the not-so-easy way) comes when you have more than a few hundred images: you have a database the size of the Ghostbusters Marshmallow Man crossed with a cow to back up. Even if you’re running backups off a replicated slave, your database is (most likely) many orders of magnitude larger than it needs to be, which leads to all kinds of headaches: disk space, restoring from backups, or simply running an optimize table routine on a 100GB table, which is not my idea of a nice cron job (pun intended). It feels somewhat like trying to swim with a pointless cow handcuffed to your ankle when the rest of your data is less than 48k (well, okay, 480MB). There may be some reasons why ‘enterprise’ databases (Oracle?) don’t mind this kind of cow sitting inside them, but I only have experience of ‘toy’ databases like MySQL.

Don’t store your images in your database kids.

Do use a class/function to get image urls

Your image URL schema is probably simple, something like: /images/username/size_imageid_imagename.jpg. So is it really worth having a function/class to generate URLs? Well, it’s certainly not going to hurt and it is going to save time later on. Want to shift to a CDN? Substitute smaller images for mobile devices? Serve the image from a different server depending on the geographic location of the user? No problem: most of these could be done with a few lines of code added to the image URL class.

//normal images

$image_url->get_image_url($image_id);

$image_url->get_user_avatar($user_id);

//mobile devices? piece of cake

$image_url->set_mobile_device();

$image_url->get_image_url($image_id); //returns url to smaller image size

$image_url->get_user_avatar($user_id); //ditto

Do use a config file for image sizes

If you’re following the above rule, then you’ll probably be doing this anyway:

class image_url

{

var $tiny_thumbnail_size = 30;

var $thumbnail_size = 200;

var $image_size = 400;

/* etc. */

}

Simply make sure you only need to specify your image sizes in one place.
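Putting the last two points together, a URL class might look roughly like the sketch below. The size properties are the ones from the snippet above and the URL pattern follows the /images/username/size_imageid_imagename.jpg schema; everything else is an assumption for illustration (the constructor, the private build() helper, and passing the username and image name in directly rather than looking them up from an image id).

```php
<?php
// Sketch only: property names follow the post, everything else is assumed.

class image_url
{
    // The single place where sizes (in pixels) are defined.
    public $tiny_thumbnail_size = 30;
    public $thumbnail_size = 200;
    public $image_size = 400;

    private $base_url;
    private $mobile = false;

    public function __construct(string $base_url = '')
    {
        $this->base_url = rtrim($base_url, '/');
    }

    public function set_mobile_device(bool $mobile = true): void
    {
        $this->mobile = $mobile;
    }

    // Mobile visitors get the thumbnail size instead of the full image.
    public function get_image_url(string $username, int $image_id, string $name): string
    {
        $size = $this->mobile ? $this->thumbnail_size : $this->image_size;
        return $this->build($username, $size, $image_id, $name);
    }

    private function build(string $username, int $size, int $image_id, string $name): string
    {
        return sprintf(
            '%s/images/%s/%d_%d_%s.jpg',
            $this->base_url,
            rawurlencode($username),
            $size,
            $image_id,
            rawurlencode($name)
        );
    }
}

$image_url = new image_url('http://images-a.mysite.com');
echo $image_url->get_image_url('bob', 23, 'sunset'), "\n";
// http://images-a.mysite.com/images/bob/400_23_sunset.jpg

$image_url->set_mobile_device();
echo $image_url->get_image_url('bob', 23, 'sunset'), "\n";
// http://images-a.mysite.com/images/bob/200_23_sunset.jpg
```

Because every URL goes through build(), moving to a different host or changing a size is a one-line change rather than a hunt through your templates.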

That’s it (for now)

Well I’m sure I’ll be back to add some more points, in the meantime hope you find this a useful read and look forward to your feedback!